NBC: Facial Recognition’s ‘Dirty Little Secret’ Is Using Millions of Pictures Without Consent

by Lucas Nolan, Breitbart:

NBC News notes in a recent article that the faces of millions of people are being used without their consent to program facial recognition software that could one day be used to spy on them.

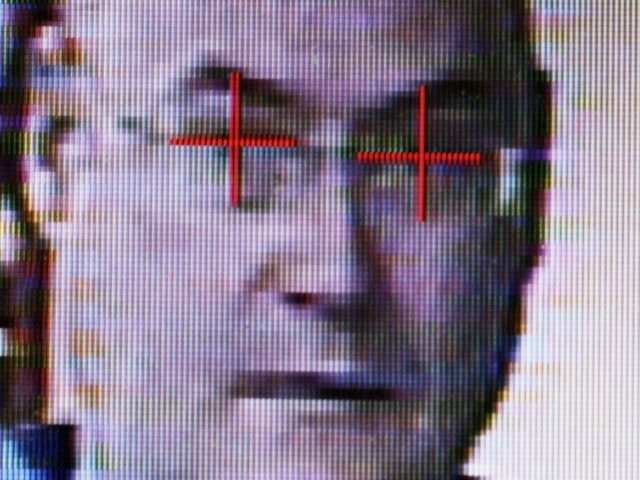

In an article titled “Facial Recognition’s ‘Dirty Little Secret’: Millions of Online Photos Scraped Without Consent,” NBC News discusses the moral issues surrounding facial recognition and the use of machine learning to analyze individuals’ faces in order to further develop these systems. The article explains how facial recognition technology is trained, stating:

Facial recognition can log you into your iPhone, track criminals through crowds and identify loyal customers in stores.

The technology — which is imperfect but improving rapidly — is based on algorithms that learn how to recognize human faces and the hundreds of ways in which each one is unique.

To do this well, the algorithms must be fed hundreds of thousands of images of a diverse array of faces. Increasingly, those photos are coming from the internet, where they’re swept up by the millions without the knowledge of the people who posted them, categorized by age, gender, skin tone and dozens of other metrics, and shared with researchers at universities and companies.

However, one major ethical issue surrounding the development of facial recognition is that the images used to help the machine learning algorithms “learn” human faces are often taken from publicly available photos.

As the algorithms get more advanced — meaning they are better able to identify women and people of color, a task they have historically struggled with — legal experts and civil rights advocates are sounding the alarm on researchers’ use of photos of ordinary people. These people’s faces are being used without their consent, in order to power technology that could eventually be used to surveil them.

That’s a particular concern for minorities who could be profiled and targeted, the experts and advocates say.

“This is the dirty little secret of AI training sets. Researchers often just grab whatever images are available in the wild,” said NYU School of Law professor Jason Schultz.

IBM has promised to remove people from its dataset, but finding out whether an image of you is included in that dataset is where things get tricky.

John Smith, who oversees AI research at IBM, said that the company was committed to “protecting the privacy of individuals” and “will work with anyone who requests a URL to be removed from the dataset.”

Despite IBM’s assurances that Flickr users can opt out of the database, NBC News discovered that it’s almost impossible to get photos removed. IBM requires photographers to email links to photos they want removed, but the company has not publicly shared the list of Flickr users and photos included in the dataset, so there is no easy way of finding out whose photos are included. IBM did not respond to questions about this process.

Loading...