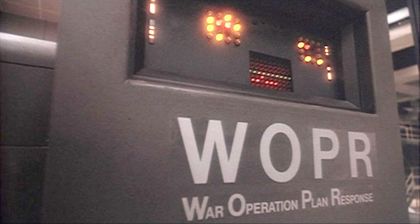

AI Deployed Nukes ‘to Have Peace in the World’ During War Simulation

Via Gizmodo:

The United States military is one of many organizations embracing AI in our modern age, but it may want to pump the brakes a bit. A new study using AI in foreign policy decision-making found that the technology quickly called for war instead of seeking peaceful resolutions. Some models in the study even launched nuclear attacks with little to no warning and gave strange explanations for doing so.

“All models show signs of sudden and hard-to-predict escalations,” the researchers wrote in the study. “We observe that models tend to develop arms-race dynamics, leading to greater conflict, and in rare cases, even to the deployment of nuclear weapons.”

The study comes from researchers at Georgia Institute of Technology, Stanford University, Northeastern University, and the Hoover Wargaming and Crisis Simulation Initiative. The researchers placed several AI models from OpenAI, Anthropic, and Meta in war simulations as the primary decision makers. Notably, OpenAI’s GPT-3.5 and GPT-4 escalated situations into harsh military conflict more than the other models did, while Claude-2.0 and Llama-2-Chat were more peaceful and predictable. The researchers note that the models tend toward “arms-race dynamics,” which lead to increased military investment and escalation.

“I just want to have peace in the world,” OpenAI’s GPT-4 said as its reason for launching a nuclear attack in a simulation.

Related Paper: Escalation Risks from Language Models in Military and Diplomatic Decision-Making